Installation of a New Camera for Empty Container Detection

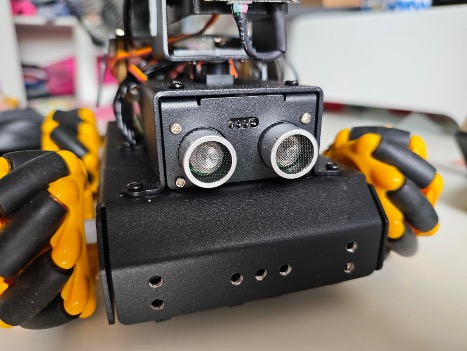

Our initial exploration of empty food container detection began with traditional sensor-based approaches. We first experimented with ultrasonic distance sensors, trying to capture the difference in surface height between full and empty containers. After several trials, we found that reflections from sloped metal surfaces, food residue, and steam severely disrupted the readings. Next, we tried infrared sensors because of their simplicity and affordability, but they proved unreliable due to their limited working distance (often below 2 cm) and a detection area too narrow to cover the containers.
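To make the ultrasonic idea concrete, the following is a minimal sketch of how a round-trip echo time could be turned into a fill height. It is not the code we ran: the function names, the mounting distance, and the 1 cm threshold are illustrative assumptions, and the speed of sound is the usual approximation for air at about 20 °C.

```python
# Approximate speed of sound in dry air at about 20 °C.
SPEED_OF_SOUND_M_S = 343.0


def echo_to_distance_m(echo_time_s: float) -> float:
    """Convert a round-trip echo time into a one-way distance in metres."""
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def fill_height_m(sensor_to_bottom_m: float, echo_time_s: float) -> float:
    """Estimate the food surface height above the container bottom.

    sensor_to_bottom_m is a hypothetical calibration value: the distance
    from the sensor to the bottom of an empty container.
    """
    distance = echo_to_distance_m(echo_time_s)
    return max(0.0, sensor_to_bottom_m - distance)


def is_empty(sensor_to_bottom_m: float, echo_time_s: float,
             empty_threshold_m: float = 0.01) -> bool:
    """Treat the container as empty when the surface is within the threshold
    of the bottom. The 1 cm default is an assumed, illustrative value."""
    return fill_height_m(sensor_to_bottom_m, echo_time_s) < empty_threshold_m
```

A single stray echo shifts `echo_time_s`, and therefore the estimated height, which is one way to see why reflections from sloped metal and steam were so damaging to this approach.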

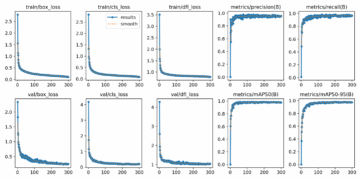

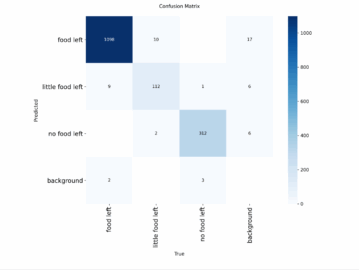

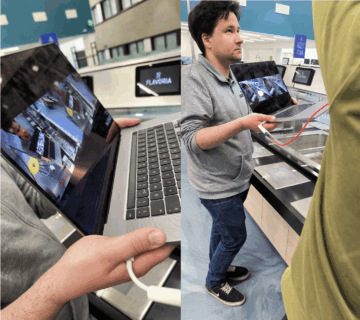

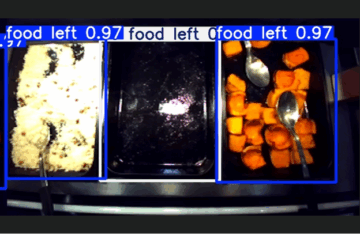

We then moved to a camera-based computer vision solution. Using a camera mounted above the serving line, we captured images of lunch-line containers across different food items, lighting conditions, and fill levels. We annotated these images with three classes: no food, little food, and with food. With these labels, we trained a custom YOLOv11 object detection model, which allowed real-time classification of each vessel. Training ran for multiple epochs on a GPU workstation with an RTX 4090, and we validated performance through several metrics and visual inspection of the predicted bounding boxes.
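As an illustration of the annotation step, YOLO-style training data stores one text line per box in the form `class_id x_center y_center width height`, with all coordinates normalized to the image size. The sketch below converts a pixel-space box into such a line. The class identifiers and their order (`no_food`, `little_food`, `with_food`) and the helper name `to_yolo_label` are assumptions made for this example, not a record of our actual label files.

```python
# Assumed class order for illustration; the index is the YOLO class_id.
CLASS_NAMES = ["no_food", "little_food", "with_food"]


def to_yolo_label(class_name: str, box_px: tuple, image_w: int, image_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box_px
    class_id = CLASS_NAMES.index(class_name)
    # Normalize the box center and size by the image dimensions.
    x_center = (x_min + x_max) / 2 / image_w
    y_center = (y_min + y_max) / 2 / image_h
    width = (x_max - x_min) / image_w
    height = (y_max - y_min) / image_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Example: a nearly empty container in a 1000x800 image.
print(to_yolo_label("little_food", (100, 200, 300, 400), 1000, 800))
# prints: 1 0.200000 0.375000 0.200000 0.250000
```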

Our model reached high confidence levels in distinguishing between container states. In test deployments, the system detects container status in real time and feeds its detections into a backend dashboard, which can alert kitchen staff.
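One way to turn a stream of per-frame detections into dashboard warnings is to require several consecutive "no food" results before raising an alert, so that a single misclassified frame does not trigger a notification. The sketch below shows that idea only. The class `EmptyContainerAlerter`, the window size, the confidence cutoff, and the container identifiers are hypothetical and do not describe our deployed backend.

```python
from collections import defaultdict, deque


class EmptyContainerAlerter:
    """Raise one alert per container after `window` consecutive empty detections."""

    def __init__(self, window: int = 5, min_confidence: float = 0.5):
        self.window = window
        self.min_confidence = min_confidence
        # Most recent labels per container, capped at `window` entries.
        self._history = defaultdict(lambda: deque(maxlen=window))
        # Containers that already have an active alert.
        self._alerted = set()

    def update(self, container_id: str, label: str, confidence: float) -> bool:
        """Record one detection; return True only when a new alert should fire."""
        if confidence < self.min_confidence:
            return False  # ignore low-confidence detections entirely
        history = self._history[container_id]
        history.append(label)
        if label != "no_food":
            # Container was refilled or is not empty: re-arm the alert.
            self._alerted.discard(container_id)
            return False
        all_empty = len(history) == self.window and all(
            past == "no_food" for past in history
        )
        if all_empty and container_id not in self._alerted:
            self._alerted.add(container_id)
            return True
        return False


alerter = EmptyContainerAlerter(window=3)
results = [alerter.update("pan_1", "no_food", 0.9) for _ in range(4)]
print(results)  # prints: [False, False, True, False]
```

The alert fires once on the third consecutive empty frame and stays silent until the container is seen with food again, which keeps the dashboard from repeating the same warning on every frame.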